Abstract

High-quality driving video generation is crucial for providing training data for autonomous driving models. However, current generative models rarely address camera motion control in multi-view settings, which is essential for driving video generation. We therefore propose MyGo, an end-to-end framework for video generation that conditions on the motion of onboard cameras to improve camera controllability and multi-view consistency. MyGo uses additional plug-in modules to inject camera parameters into a pre-trained video diffusion model, retaining as much of the pre-trained model's knowledge as possible. Furthermore, we use epipolar constraints and neighbour view information when generating each view to enhance spatial-temporal consistency. Experimental results show that MyGo achieves state-of-the-art results in both general camera-controlled video generation and multi-view driving video generation, laying the foundation for more accurate environment simulation in autonomous driving.

Method

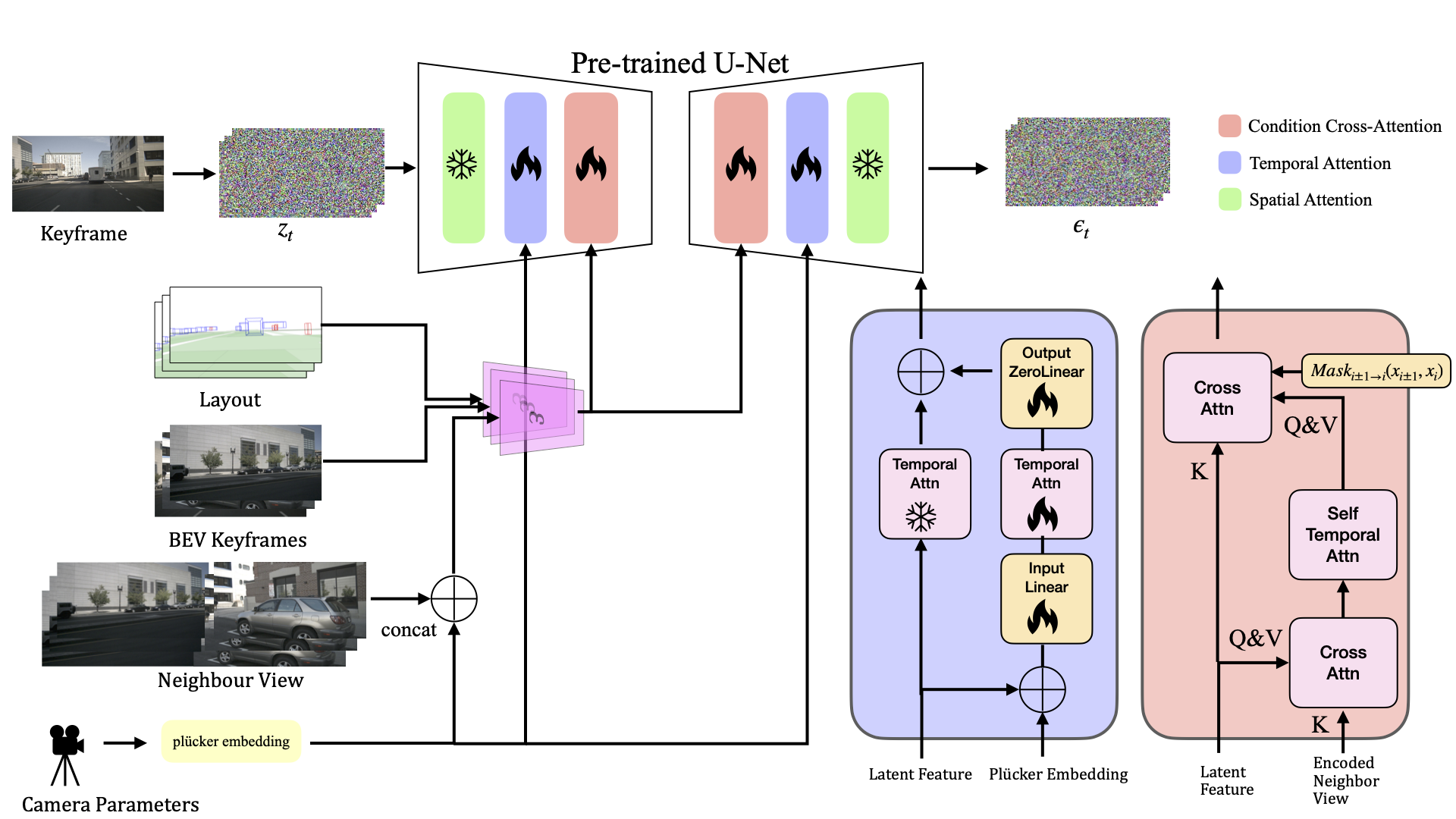

MyGo takes a BEV map, 3D bounding boxes, the neighbour view, keyframes and camera parameters as conditions, and processes them with a unified encoder. The encoded conditions are then integrated into the U-Net through a condition cross-attention block. We design a ControlNet-like structure to inject the camera's Plücker coordinates into the pre-trained U-Net blocks. Moreover, in the neighbour view cross-attention block, we use epipolar geometry as a constraint to guide the cross-attention computation.
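To make the two geometric ingredients concrete, the following is a minimal NumPy sketch, not the MyGo implementation. It assumes a pinhole camera with intrinsics `K` and a camera-to-world pose `c2w`, and uses the common per-pixel Plücker convention (moment `o × d` followed by unit direction `d`). For the epipolar part, it assumes the constraint is applied as a boolean mask that keeps only neighbour-view pixels within a pixel `threshold` of the epipolar line; how the constraint actually enters the cross-attention is not specified here. The function names `plucker_rays` and `epipolar_mask` and all parameters are hypothetical.

```python
import numpy as np


def plucker_rays(K, c2w, height, width):
    """Per-pixel Plücker coordinates (o x d, d), shape (H, W, 6).

    Assumes a pinhole camera: K is the 3x3 intrinsic matrix and c2w is a
    4x4 camera-to-world pose. Both conventions are assumptions.
    """
    # Pixel centres in image coordinates.
    v, u = np.meshgrid(np.arange(height) + 0.5,
                       np.arange(width) + 0.5, indexing="ij")
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)      # (H, W, 3)
    # Back-project to camera-frame directions, then rotate to world frame.
    dirs = pix @ np.linalg.inv(K).T @ c2w[:3, :3].T
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)
    # Every ray starts at the camera centre.
    origin = np.broadcast_to(c2w[:3, 3], dirs.shape)
    moment = np.cross(origin, dirs)
    return np.concatenate([moment, dirs], axis=-1)


def skew(t):
    """Cross-product matrix [t]_x such that skew(t) @ x == np.cross(t, x)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def epipolar_mask(K_q, K_n, R, t, pix_q, pix_n, threshold):
    """Boolean mask (Nq, Nn): True where a neighbour pixel lies within
    `threshold` pixels of the epipolar line of a query pixel.

    Assumes x_n = R @ x_q + t maps query-camera points to the neighbour
    camera, and that pix_q / pix_n are (N, 2) arrays of (u, v) pixels.
    """
    # Fundamental matrix from the relative pose.
    F = np.linalg.inv(K_n).T @ skew(t) @ R @ np.linalg.inv(K_q)
    q_h = np.concatenate([pix_q, np.ones((len(pix_q), 1))], axis=-1)
    n_h = np.concatenate([pix_n, np.ones((len(pix_n), 1))], axis=-1)
    lines = q_h @ F.T                                     # (Nq, 3) lines a*u + b*v + c = 0
    # Point-to-line distance in pixels.
    num = np.abs(lines @ n_h.T)                           # (Nq, Nn)
    den = np.linalg.norm(lines[:, :2], axis=-1, keepdims=True)
    return num / np.maximum(den, 1e-8) < threshold
```

Under these assumptions, the `(H, W, 6)` Plücker map could be fed to a ControlNet-like branch as a dense camera condition, and the mask could restrict which neighbour-view tokens each query token attends to, for example by setting masked attention logits to a large negative value before the softmax.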

Results

We conducted experiments on the RealEstate10K and nuScenes datasets. On the RealEstate10K dataset, we achieved precise control of the camera position and high-quality video generation through the camera control module. On the nuScenes dataset, we further enhanced the spatial consistency of the generated results using the neighbour view control module.

RealEstate10K

Driving video generation with multi-view consistency on nuScenes

Without the neighbour view module, cross-view consistency cannot be maintained, as seen in the last few frames of the left-front view in the first example and in the truck in the right-front view in the second example.

MyGo with epipolar-geometry-based neighbour view conditioning module

MyGo without neighbour view conditioning module

Motion Editable Generation on nuScenes

Quantitative Results