Abstract

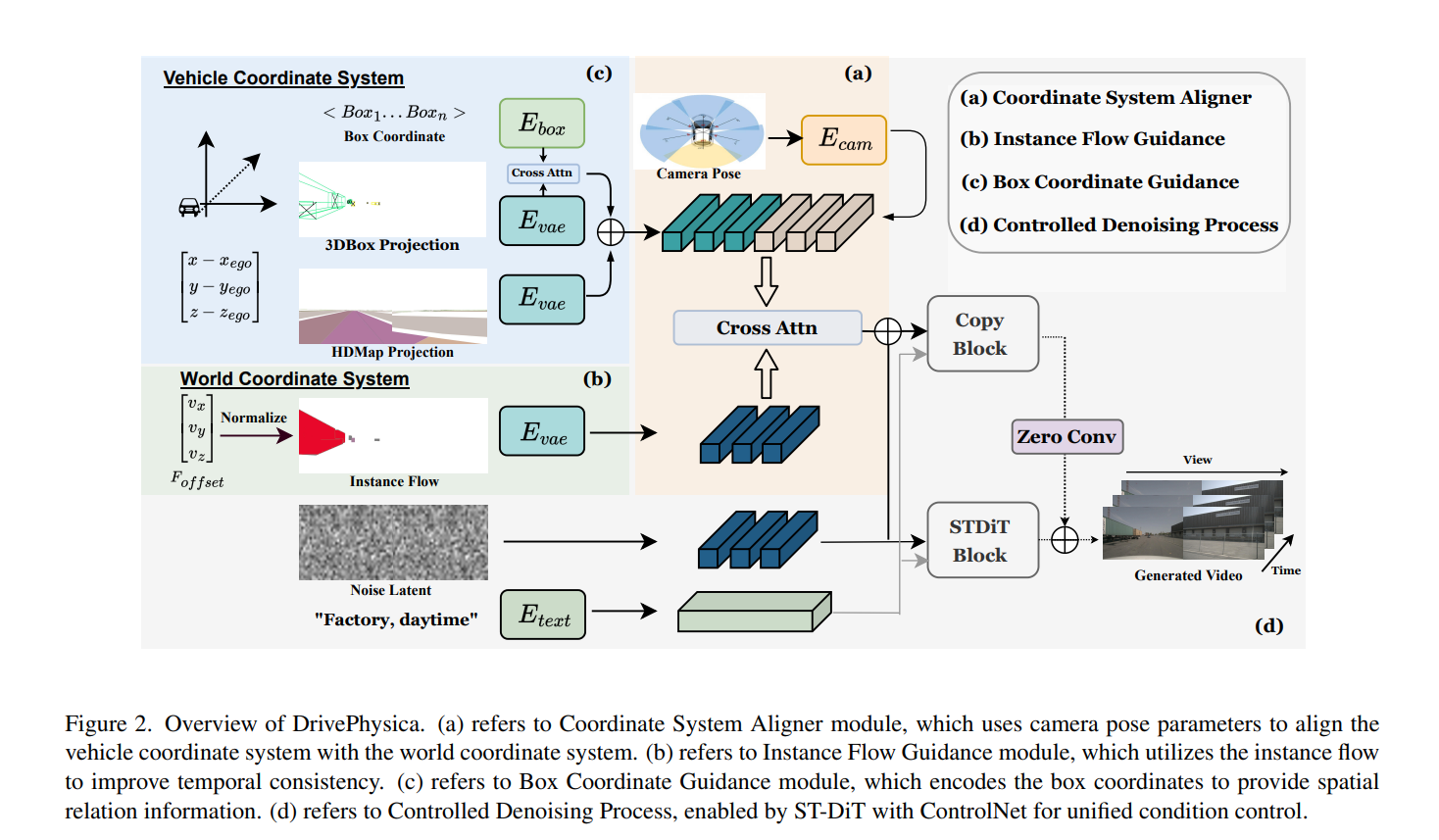

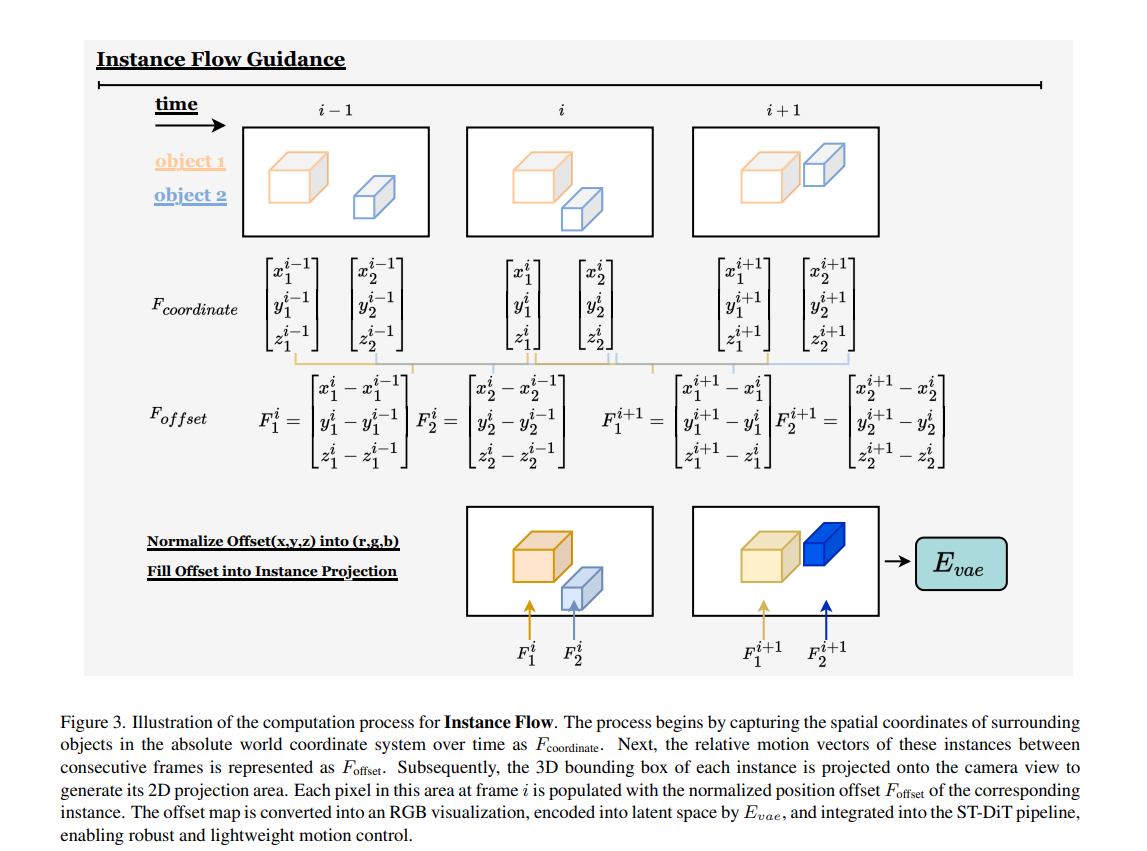

Autonomous driving requires robust perception models trained on high-quality, large-scale multi-view driving videos for tasks such as 3D object detection, segmentation, and trajectory prediction. While world models provide a cost-effective way to generate realistic driving videos, it remains challenging to ensure that these videos adhere to fundamental physical principles, such as relative and absolute motion, spatial relationships (e.g., occlusion and spatial consistency), and temporal consistency. To address these challenges, we propose DrivePhysica, a model designed to generate realistic multi-view driving videos that adhere to these physical principles through three key advancements: (1) a Coordinate System Aligner module that integrates relative and absolute motion features to enhance motion interpretation, (2) an Instance Flow Guidance module that ensures precise temporal consistency via efficient 3D flow extraction, and (3) a Box Coordinate Guidance module that improves spatial relationship understanding and accurately resolves occlusion hierarchies. Grounded in these physical principles, DrivePhysica achieves state-of-the-art performance in driving video generation quality (3.96 FID and 38.06 FVD on the nuScenes dataset) and in downstream perception tasks.